Fast Gradient method with Armijo Rule¶

[17]:

import numpy as np

from IPython.display import display, Image, HTML

display(HTML("""

<style>

.output {

display: flex;

align-items: center;

text-align: center;

}

</style>

"""))

Quadratic Function¶

This function is a polynomial of degree 2.

The Quadratic function \(r: \mathbb{R}^n \rightarrow \mathbb{R}\) is given by:

\[f(x) = \frac{1}{2} x^T Q x + b^T x + c\]

[19]:

from src.function import Function

class Quadratic(Function):

def eval(self, Q, c, x, gamma):

return 0.5 * x.T.dot(Q).dot(x) + c + gamma

def gradient(self, Q, c, x):

return Q.dot(x) + c

def hessian(self):

pass

Example¶

The parameters will be the following:

\[\varepsilon := 10^{-5}, \delta = 0.01\]Start point will be the following:

\[x^0 := (1, 2, 3, 4)\]The Q matrix will be the following:

\[\begin{split}\begin{bmatrix}1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & \delta \end{bmatrix}\end{split}\]

[20]:

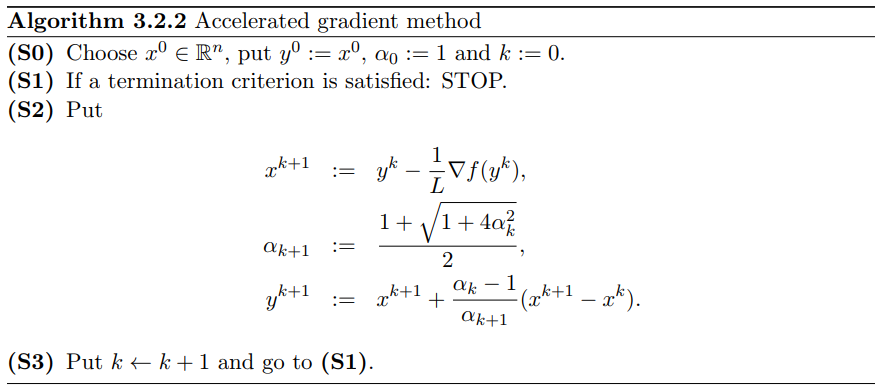

from src.optimizers.fast_gradient_method import FastGradientMethod

objective = Quadratic()

starting_point = np.array([1, 2, 3, 4])

delta = 0.01

epsilon = 0.00001

estimates = list()

iterations = list()

optimizer = FastGradientMethod()

x = optimizer.optimize(starting_point,

objective,

epsilon,

delta)

print(f'Optimal Point: {x}')

print(f'Iterations: {optimizer.iterations}')

Optimal Point: [ -1. -1. -1. -100.00082264]

Iterations: 711